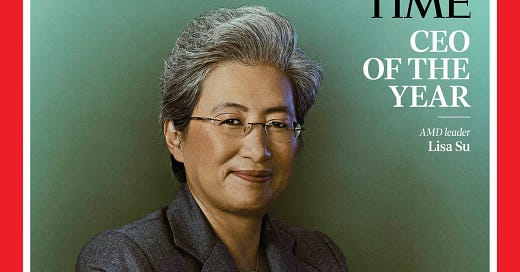

Profile of a Chip Industry Titan

Lisa Su, the AMD chief exec, is TIME's 2024 CEO of the Year. Plus: OpenAI's 'reasoning' AI tries and fails to build a birdhouse

I spent the morning after the U.S. presidential election in a cab riding from San Francisco to Santa Clara, bleary-eyed from lack of sleep and trying to put politics to the back of my mind before an important interview. Luckily, when I arrived, my subject quickly confided that she had been up late too, having turned off the television only when it became clear that Donald Trump would be returning to the White House.

So begins my latest profile, which recognizes Su as TIME’s 2024 CEO of the Year. It’s the story of how Su executed a remarkable turnaround of a business that many people had once written off. But it’s also a primer on the semiconductor industry as a whole, which, on the eve of Trump’s return to office, has never been more important geopolitically or economically. The success or failure of AI, the triumph of America or China, and the continued growth of most people’s retirement funds all hinge, to a greater or lesser extent, on the advanced chips that now power our world and on the companies that design and produce them. If you’re trying to understand that new reality, I could think of worse places to start than this story.

It’s the second year in a row that I’ve written this feature for TIME, following last year’s piece about Sam Altman, which I co-authored with my former boss Naina Bajekal. Thankfully, AMD’s board didn’t fire and rehire Su after our interview, which made writing this one far more straightforward.

OpenAI’s Embarrassing Ad

If you’re a nerd, you might remember the moment, approximately fifty years ago, when an ad for Google’s AI-augmented search wrongly attributed a discovery to the James Webb Space Telescope. The ad was seen as a major blunder in the context of the company’s flat-footed attempts to catch up with OpenAI, and Google’s stock price temporarily tanked.

Well, last week, it was OpenAI’s turn. In an ad published to mark the release of its new “reasoning” model o1, OpenAI showed a user uploading a picture of a birdhouse and asking for advice on how to build a similar one.

On the face of it, the model’s answer was impressive. It spit out a lengthy response, complete with multiple steps and even advice on which safety gear to wear.

But look closely at the text, and it tells a very different story. The model gave dimensions for only one wooden panel. It appeared to mislabel the dimensions for several other panels as glue, sandpaper, and sealant. Even if you noticed that mistake and cut some wood to the given dimensions, the pieces would still be completely wrong for building the birdhouse at hand, or indeed anything useful at all. “You would know just as much about building the birdhouse from the image as you would the text, which kind of defeats the whole purpose of the AI tool,” the director of the Institute of Carpenters tells me. (OpenAI didn’t respond to a request for comment.)

P.S. I wouldn’t recommend reading this story as evidence that these tools all suck and will never be capable or dangerous. They are clearly highly capable already, as anybody who uses them regularly can attest. Rather, I think this story shows three things. 1) Capable ≠ free of basic, unexpected flaws. 2) Companies still aren’t checking their ads! And 3) how dangerous an AI is isn’t necessarily correlated with how capable it is. A stupid AI can be extremely dangerous too, if people choose to blindly trust its outputs or hand it unsupervised power. Before we get to superintelligent AI, we’ll have dumb AIs in which people and companies place far too much trust, and to which they delegate far too much power. In fact, that’s already happening.

Programming note

I’m now back at work after a few months off spent traveling the world. It was great fun. You can now expect to resume receiving these emails at a semi-regular but still entirely unpredictable cadence. Thanks for reading!