A Scoop on U.S. AI Policy

A report, commissioned by the State Department, makes the case for urgent and unprecedented AI regulation

Welcome back to Billy’s Newsletter. Today, I’m giving you an exclusive look at a fascinating piece of AI policy news out of the U.S.

The U.S. Must Move ‘Decisively’ To Avert ‘Extinction-Level’ Threat from AI, a Government-Commissioned Report Says

Even before the release of ChatGPT, some officials in the U.S. government were growing increasingly worried about the risks posed by AI.

In a meeting with the State Department in late 2021, two AI experts, Jeremie Harris and Mark Beall, convinced a group of U.S. officials that, despite AI’s meager abilities at that point in time, its rate of progress should be ringing alarm bells. The pair argued that AI capabilities were rising on an exponential upward curve and could soon reach dangerous levels. The meeting, which has not previously been reported, led the State Department to commission a detailed report on the national security risks posed by advanced AI. In November 2022, Harris and Beall’s company, Gladstone AI, was awarded the $250,000 public contract. Last month, Gladstone delivered the finished report to the government.

I got my hands on the 247-page report last week, and today we’re publishing an exclusive look at it in TIME. Its findings are stark. The report says the U.S. government must move “quickly and decisively” to avert what it says are substantial national security risks stemming from advanced AI. The technology could, in the worst-case scenario, pose an “extinction-level threat to the human species,” the report says.

The report’s policy proposals are even starker. It recommends sweeping and unprecedented new measures that go far beyond the U.S. government’s current AI policy. The recommendations include outlawing the training of AI models above a certain compute threshold, and making it illegal to open-source the weights of sufficiently capable models. (The State Department did not respond to my requests for comment. The first page of the report says its contents “do not reflect the views” of the U.S. government.) There are many more such recommendations. Those of you with strong stomachs for AI policy can read the full story in TIME here:

Beall, one of the report’s three coauthors, is a former Defense Department official. He has since quit Gladstone to start a super PAC that aims to make AI safety and security “a key issue in the 2024 elections.” The PAC, called Americans for AI Safety, officially launches today.

There are other notable details in the report, too. In preparing it for the State Department, the authors spoke with more than 200 government officials, experts, and employees at the world’s top AI labs including OpenAI, Google DeepMind, Meta, and Anthropic. Those employees tend to be very circumspect about sharing their fears with journalists. But many of them privately shared significant concerns with the authors about the safety of their work and the incentives driving their company leaders, according to the report.

Are you one of these people? Want to share your concerns in more detail with a journalist who gets it? My Signal username is billyperrigo.01 and I can protect your identity.

Some key allegations emerge in these sections of the report: that AI labs face an economic incentive to prioritize speed over safety; take cybersecurity insufficiently seriously to prevent foreign espionage; don’t put in place protections strong enough to contain a rogue AI; and “game” evaluations that are meant to certify models as safe. Now, it should be noted that not only are these employees cited anonymously, but the report also does not specify which exact lab (or labs) they work at, making it hard to know exactly what to take away from the claims. OpenAI, Google, Meta and Anthropic didn’t respond to my requests for comment, and I can hardly blame them, since at least as far as employees’ concerns go, the report pins nothing concrete on any of them. For what it’s worth, all of these companies say their AI research is safe and responsible.

Still, the anonymous accounts offer a rare unvarnished look inside the top AI labs, indicating that, at least among some workers there, anxieties are rising. “We have served, through this project, as a de-facto clearing house for the concerns of frontier researchers who are not convinced that the default trajectory of their organizations would avoid catastrophic outcomes,” Harris, the CEO of Gladstone AI and one of the co-authors of the report, told me. “The level of concern … is difficult to overstate.”

You can read my full breakout report on those employees’ fears in TIME here:

Billy’s view

I’m going to take a leaf out of Semafor’s book here and share briefly how I think you should interpret this news.

Let’s address the elephant in the room: Isn’t this science fiction? Is this a real thing we should be worried about? Look, I don’t know, and I don’t think anyone has enough evidence to be certain. But there’s plenty of evidence that AI capabilities are reliably increasing in line with the amount of computing power used to train those systems (the correlation is so clear it’s known as the scaling laws), and we know that, as a result, the leading tech companies are now racing to train the next generation of systems using orders of magnitude more computing power than the last. Might the correlation between scale and capabilities taper off? Maybe. Might it continue? Maybe. In this climate, it seems sensible to think about contingency plans.

But many people in the press, and on Twitter, see the idea of AI posing an existential risk to humanity as an industry ploy. Stoking fears about the so-called ‘x-risk’ of advanced AI, this idea goes, is just a way for tech bros to boost their companies’ stock market valuations and avoid talking about existing flaws in their products, like bias and copyright infringement. There is an element of truth to this.

But the Gladstone report shows the limits of this line of thinking. The leaders of the top AI labs, and the Big Tech companies bankrolling them, aren’t particularly worried about existential threats from AI. In fact, they are businessmen who are optimistic (and self-interested) enough to believe that the benefits of scaling up AI systems outweigh the risks. Raising fears about how their technology might wipe out humanity, and pushing for regulations like the ones in the Gladstone report, for them, would be a terrible business strategy. Which explains why they’re (largely) not the ones doing it. Reasonable people can disagree on the likelihood of future AI posing an existential risk, but it’s time to squash the myth that it’s a Big Tech conspiracy.

Recommended reading

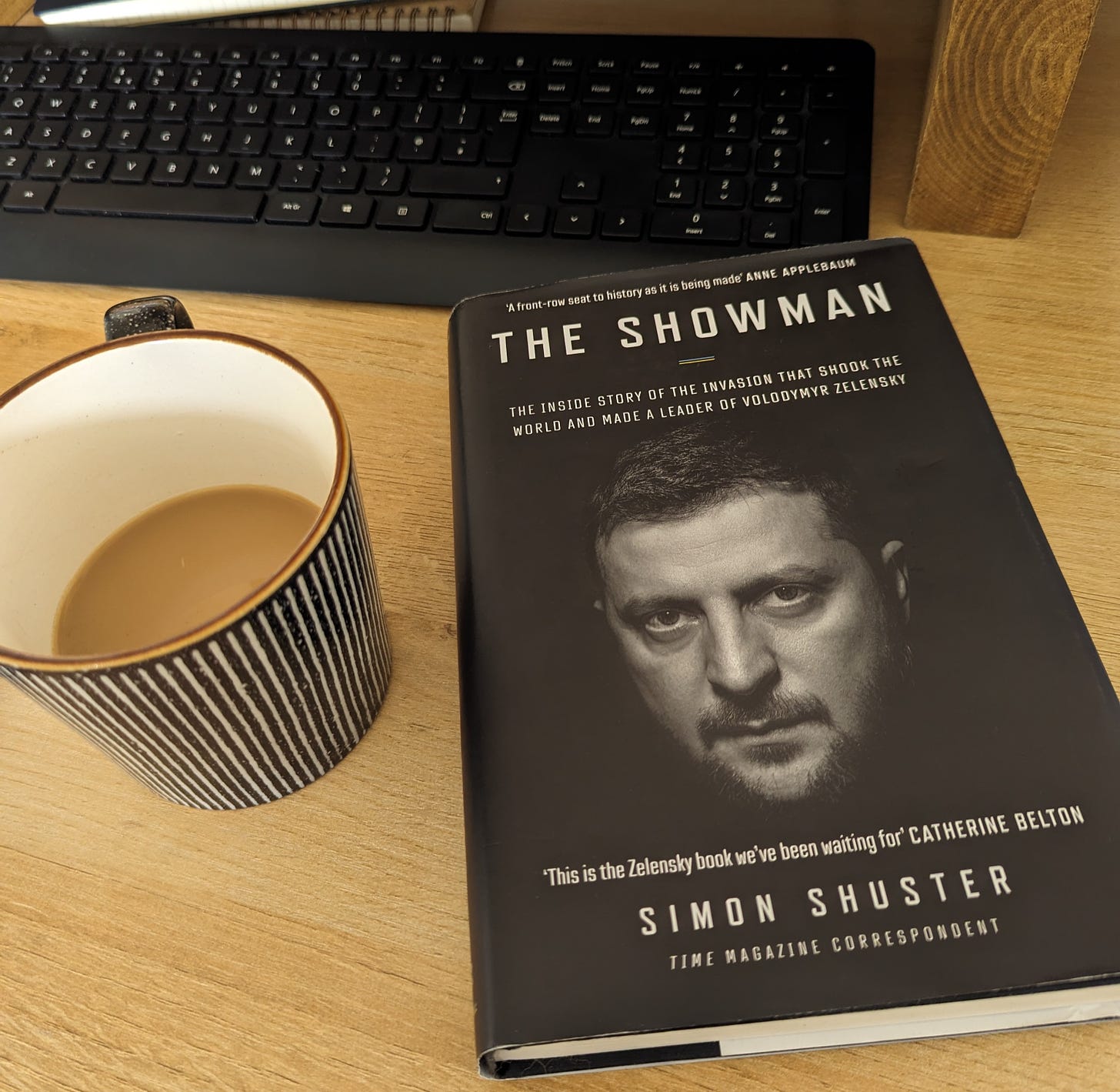

I recently finished my colleague Simon Shuster’s excellent book The Showman about Ukrainian President Volodymyr Zelensky. Simon has spent months in Kyiv chronicling the Russian invasion, interviewing Zelensky and his aides in their underground bunker, and accompanying the President to the frontlines. He has better access to Zelensky than any other journalist, which is reason enough to read the book. But he’s also just an all-round brilliant reporter, writer and thinker. I can’t recommend his book enough. (The Showman by Simon Shuster.)

My friend Andrew Chow, fellow tech correspondent at TIME, has just started a newsletter. (Not sure where he got that idea.) He too has a book coming out, later this year, about the batshit insane world of crypto. I’m very much looking forward to reading it, but before then, he’ll be publishing weekly installments on Substack to whet the appetite. You should join me in subscribing. (Read Andrew’s Substack and preorder his book Cryptomania.)

That’s all, folks. I’ll be back in your inboxes soon. If you liked the scoop above, please consider sharing it in a tweet, forwarding this email to a colleague, or printing out the webpage and handing it to a random person on the street.

Correction

The version of this email that hit inboxes misstated who attended the meeting with State Department officials on behalf of Gladstone in 2021. It was Jeremie Harris and Mark Beall, not Jeremie Harris and his brother Edouard.

This is a good opportunity to say that, on the hopefully rare occasions that I have to make corrections in this newsletter, I’ll do so publicly and above board. That’s a standard journalistic procedure, and I hope it makes you trust that what you read here is accurate.